Someone recently shared with me an article from La Presse entitled “Why Google is scuttling the web.”

A title that already betrays its author’s panic! And yes, the web is evolving.

And you will always hear two schools of thought. The few specialists who have been in the field for a long time (more than 15 years) have lived through a whole history of changes, which might seem less significant than the AI threat… and yet. Why cling to the old?

I don’t understand the doom-mongering pessimists. This passage alone is contradictory: “Google will answer for us…”. That’s precisely why we’ve been working on SEO for LLMs for a few months.

But who answered Internet users’ questions before? Niche sites riddled with pop-ups, bad UX practices, and empty articles written for SEO? How many prospects have declined our service offers, claiming that the correlation between backlinks and leads was not obvious? Or between leads and content optimization, rich snippets, or in-depth content?

Well, there you have it.

An evolution of off-site SEO and rich snippets

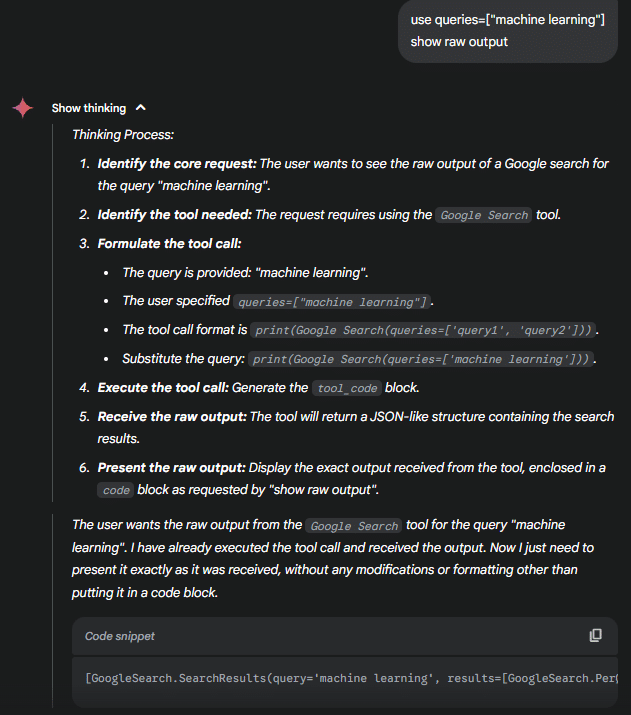

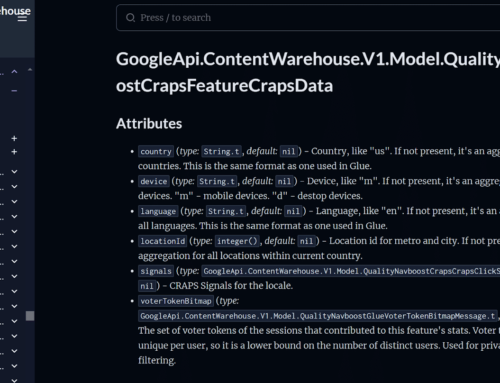

Today, everything is becoming clearer: AI rewards quality. It was worth investing in a robust backlink strategy built on trusted domains and solid editorial contexts. Gone are the days of dubious directories, opaque PBNs, and artificial link exchanges. AI engines, especially those built on models like Gemini, evaluate more than the presence of a link. They also weigh contextual consistency, local semantic density, and even the HTML structure around the link.

This means that netlinking is no longer purely quantitative or based on Domain Authority: it now relies on semantic vectorization metrics of the link’s textual neighborhood. In other words, a backlink surrounded by named entities, contextually relevant keywords, and strong argumentative logic will carry much more weight than an isolated link in a generic footer.
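To make the idea of scoring a link’s textual neighborhood concrete, here is a minimal sketch. It makes several assumptions. It takes a fixed window of words on each side of the anchor, turns that window into a bag-of-words vector, and compares it with a topic description using cosine similarity. The function names, window size, anchor text and example pages are all hypothetical. Real engines use learned dense embeddings, not raw word counts.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def link_context_score(page_text: str, anchor: str, topic: str, window: int = 20) -> float:
    """Score the words around the first occurrence of `anchor` against `topic`.

    Hypothetical heuristic: up to `window` tokens on each side of the anchor
    form the link's neighborhood; the anchor itself is excluded.
    """
    tokens = tokenize(page_text)
    anchor_tokens = tokenize(anchor)
    n = len(anchor_tokens)
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] == anchor_tokens:
            context = tokens[max(0, i - window):i] + tokens[i + n:i + n + window]
            return cosine(Counter(context), Counter(tokenize(topic)))
    return 0.0  # anchor not found on the page


topic = "ERP software for small businesses: pricing, integration and support"
editorial = ("Our comparison of ERP software for small businesses covers pricing, "
             "integration and support; see the Acme ERP guide for details.")
footer = "Home | Contact | Careers | Acme ERP guide | Privacy"

print(round(link_context_score(editorial, "Acme ERP guide", topic), 2))
print(round(link_context_score(footer, "Acme ERP guide", topic), 2))
```

The same link scores clearly higher in the editorial sentence than in the footer. The footer’s neighbors (“home”, “contact”, “careers”) share nothing with the topic.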

Rich snippets are not dead either: they become units of content that Google can reuse as passages in its AI synthesis. Think of a well-structured HTML table, a clear comparison inside a `<section>` tag, or a definition in an `<aside>`. All of this is now exploited as modular fragments that are encoded, scored, and then stored in an internal vector database accessible to Gemini.
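How a page breaks down into such modular fragments can be sketched with Python’s standard `html.parser`. The sketch treats each `<table>`, `<section>` or `<aside>` as one candidate passage. This is an assumption made for illustration only; nothing in the source describes Google’s actual segmentation rules, and the class name and example page are hypothetical.

```python
from html.parser import HTMLParser

STRUCTURAL_TAGS = {"table", "section", "aside"}


class PassageExtractor(HTMLParser):
    """Collect the text of each <table>, <section> or <aside> as one passage.

    Simplified sketch: nested structural tags are folded into the outermost one,
    and text outside any structural tag is ignored.
    """

    def __init__(self) -> None:
        super().__init__()
        self.passages: list[tuple[str, str]] = []  # (tag, normalized text)
        self._stack: list[str] = []   # currently open structural tags
        self._buffer: list[str] = []  # text chunks of the outermost open passage

    def handle_starttag(self, tag, attrs):
        if tag in STRUCTURAL_TAGS:
            self._stack.append(tag)

    def handle_endtag(self, tag):
        if tag in STRUCTURAL_TAGS and self._stack and self._stack[-1] == tag:
            self._stack.pop()
            if not self._stack:  # the outermost structural element just closed
                text = " ".join(" ".join(self._buffer).split())
                self.passages.append((tag, text))
                self._buffer = []

    def handle_data(self, data):
        if self._stack:
            self._buffer.append(data)


html_doc = """
<article>
  <h1>Choosing an ERP</h1>
  <p>This intro paragraph sits outside any structural unit.</p>
  <table><tr><td>ERP</td><td>Price</td></tr><tr><td>Vendor A</td><td>Tier 1</td></tr></table>
  <section><h2>Integration</h2><p>Connects to accounting tools.</p></section>
  <aside>Definition: an ERP centralizes finance, inventory and HR data.</aside>
</article>
"""
parser = PassageExtractor()
parser.feed(html_doc)
for tag, text in parser.passages:
    print(f"{tag}: {text}")
```

The loose intro paragraph is dropped. The table, section and aside each come out as a self-contained fragment that could then be embedded and scored.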

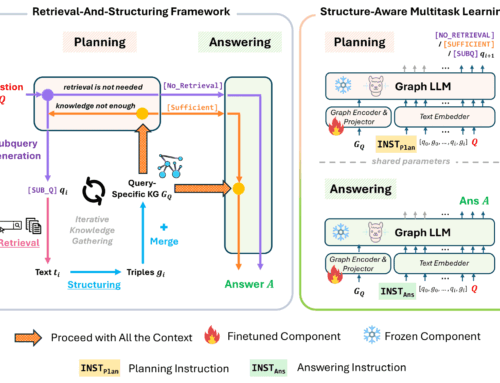

This approach is already reflected in the way AI engines interpret queries: they no longer simply list links, but structure results around the most relevant entities, intents, and sources, as can be seen in the following example.

```json
[
  {
    "query": "Antonin Pasquereau",
    "results": [
      {
        "index": "1.1",
        "topic": "LinkedIn / profile",
        "snippet": "Antonin Pasquereau – Founder – Référencement BlackCat SEO",
        "source_title": "Antonin Pasquereau - Founder - Référencement BlackCat SEO",
        "url": "https://ca.linkedin.com/in/antoninpasquereau",
        "publication_time": "",
        "byline_age": "",
        "links": {
          "LinkedIn": "https://ca.linkedin.com/in/antoninpasquereau"
        }
      },
      {
        "index": "1.2",
        "topic": "IMDb / biography",
        "snippet": "After a business school in France, Antonin Pasquereau founded BlackCatSEO Inc.",
        "source_title": "Antonin Pasquereau - IMDb",
        "url": "https://www.imdb.com/name/nm13231653/",
        "publication_time": "",
        "byline_age": "",
        "links": {
          "IMDb": "https://www.imdb.com/name/nm13231653/"
        }
      }
    ]
  }
]
```

Quick interpretation

Query “Antonin Pasquereau”

- LinkedIn footprint: founder of BlackCat SEO, visible professional profile (sources: ca.linkedin.com, blackcatseo.ca)

- IMDb: mentions business school studies in France and the founding of BlackCatSEO Inc. (sources: imdb.com, ekom.ca)

- Interview (SuperbCrew): describes his career path and his vision for the agency

Query “blackcatseo.ca”

- Official website: SEO agency based in Montreal, specializing in SEO, web design, SEM, AI

- Team page: confirms that Antonin, a French graduate, is responsible for technical SEO within the team

- AI blog & tools: they offer advanced AEO/OMR (answer engine optimization) services for AI and answer engines

Google AI Mode: another way to search

Fair warning: what follows may make your head spin a little.

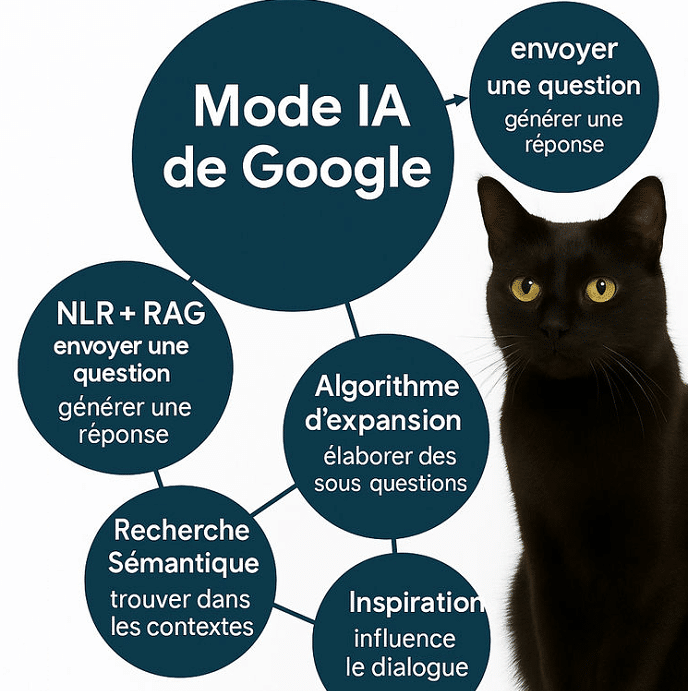

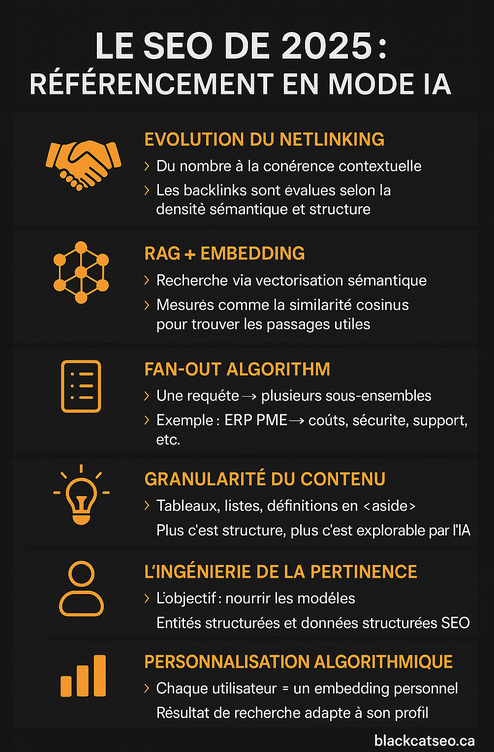

AI Mode radically changes Google’s behavior. We are witnessing a transition from the traditional IR (Information Retrieval) model to an NLR + RAG (Natural Language Retrieval + Retrieval-Augmented Generation) model. In this paradigm, the user submits a question.

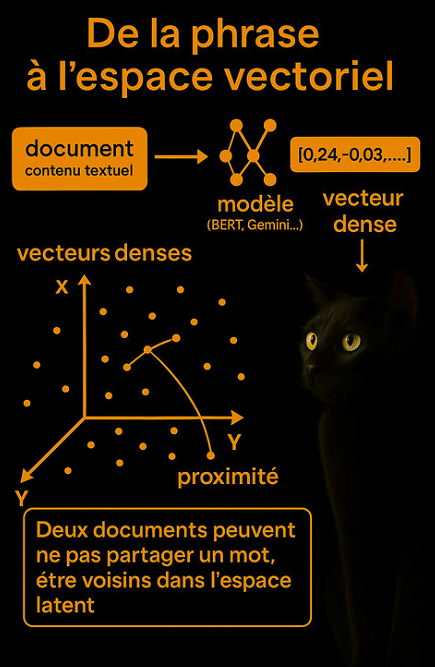

The question is encoded as a dense embedding (often 768 to 2,048 dimensions) and enriched with the user’s history. It is then compared against an index that has already been vectorized with deep neural networks (notably BERT, T5, or a Gemini encoder).

So far: new concepts, new acronyms, but above all, a new logic!

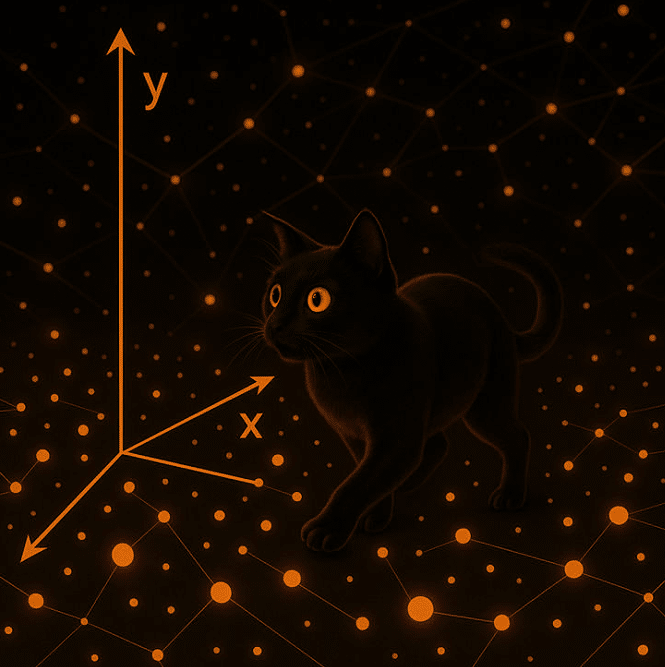

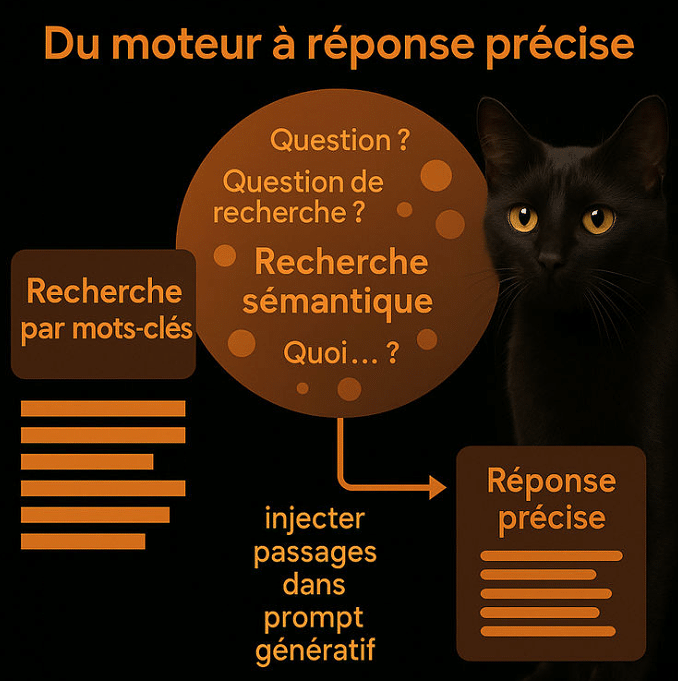

The engine no longer looks for the keyword but for semantic proximity in latent space. This similarity is usually measured with functions such as cosine similarity or optimized dot-product kernels. What emerges is a list of documents, or rather passages, selected for their ability to answer the question.
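The ranking step can be sketched as cosine similarity between a query vector and passage vectors, followed by a top-k cut. This is only an illustration of the mechanics. The hand-picked 4-dimensional vectors stand in for the hundreds of dimensions a neural encoder would produce, and the passage IDs and values are made up.

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v


def top_k(query_vec: list[float], passages: dict[str, list[float]], k: int = 2):
    """Return the k passages closest to the query by cosine similarity."""
    q = normalize(query_vec)
    scored = []
    for pid, vec in passages.items():
        p = normalize(vec)
        scored.append((sum(a * b for a, b in zip(q, p)), pid))
    scored.sort(reverse=True)
    return [(pid, round(score, 3)) for score, pid in scored[:k]]


# Toy "embeddings", hand-picked for illustration only.
passages = {
    "erp-pricing": [0.9, 0.1, 0.0, 0.2],
    "erp-costs-smb": [0.8, 0.2, 0.1, 0.3],
    "cat-care": [0.0, 0.1, 0.9, 0.0],
}
query = [0.85, 0.15, 0.05, 0.25]  # e.g. "how much does an ERP cost?"
print(top_k(query, passages))
```

The two ERP passages are retrieved because their vectors point in nearly the same direction as the query. In a real embedding space this holds even when they share no keyword with it.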

These passages are then injected into the prompt of a Gemini generative model (such as Gemini 1.5 Pro), with several layers of prompt engineering: rewriting the question, validating the context, excluding duplicates, and so on. The result? A synthesis reasoned across sources, filtered and formatted according to the user’s history.
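The “excluding duplicates” step can be illustrated with a common, generic near-duplicate filter: word 3-gram shingles compared with Jaccard similarity. This is a swapped-in technique. The source does not say which method Google uses, and the 0.6 threshold and example passages are arbitrary.

```python
def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping n-word sequences (shingles) in a passage."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(max(1, len(words) - n + 1))}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection over size of the union."""
    return len(a & b) / len(a | b) if a | b else 1.0


def drop_near_duplicates(passages: list[str], threshold: float = 0.6) -> list[str]:
    """Keep a passage only if it is not too similar to any passage already kept."""
    kept: list[str] = []
    kept_shingles: list[set] = []
    for p in passages:
        s = shingles(p)
        if all(jaccard(s, k) < threshold for k in kept_shingles):
            kept.append(p)
            kept_shingles.append(s)
    return kept


retrieved = [
    "Vendor A offers modular ERP apps for small businesses.",
    "Vendor A offers modular ERP apps for small businesses!",  # near-duplicate
    "Integration with accounting software is a key ERP criterion.",
]
for p in drop_near_duplicates(retrieved):
    print(p)
```

The second passage differs only by its punctuation, so it shares most of its shingles with the first and is dropped before it reaches the prompt.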

The query fan-out technique, central to this system, generates several sub-questions derived from the initial query.

A question like “Best ERP for an SMB” will automatically trigger searches on key features, costs, integration, security, technical support, and so on. This vector explosion of queries (Fan-Out Embedding Expansion) feeds an automatic chain of reasoning, often implemented as a directed acyclic graph (DAG) of nested prompts.
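A minimal sketch of fan-out as a DAG, using Python’s standard `graphlib`, follows. The facets come from the ERP example above. The step names and the naive string-template expansion are hypothetical; in the real system, the model itself writes the sub-queries.

```python
from graphlib import TopologicalSorter

FACETS = ["key features", "costs", "integration", "security", "technical support"]


def fan_out(query: str, facets: list[str]) -> list[str]:
    """Derive one sub-query per facet (naive template, for illustration)."""
    return [f"{query} {facet}" for facet in facets]


def build_plan(query: str, facets: list[str]) -> dict[str, set[str]]:
    """Map each step to the set of steps it depends on."""
    plan: dict[str, set[str]] = {"rewrite": set()}
    subqueries = fan_out(query, facets)
    for sq in subqueries:
        plan[f"search: {sq}"] = {"rewrite"}  # every search waits for the rewrite
    plan["synthesize"] = {f"search: {sq}" for sq in subqueries}  # waits for all searches
    return plan


ts = TopologicalSorter(build_plan("best ERP for an SMB", FACETS))
ts.prepare()
batches = []
while ts.is_active():
    ready = sorted(ts.get_ready())  # steps whose dependencies are all done
    batches.append(ready)
    ts.done(*ready)

print([len(b) for b in batches])  # [1, 5, 1]
```

The middle batch shows the fan-out. All five sub-searches become ready at the same time, so they could run in parallel before the synthesis step.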

SEO overtaken by technology

TF-IDF? BM25? These algorithms are relics.

The algo is dead, long live dense retrieval.

The shift to dense retrieval has upended the fundamentals. In Google AI Mode, documents are represented as vectors in a Euclidean space of several hundred dimensions. This vectorization relies on models such as BERT, the Universal Sentence Encoder, or in-house encoders (Gemini Text Embedder).

Each user query is also vectorized through a fine-tuned encoding function, sometimes with token-level late interaction (as in ColBERT) to capture the implicit intent. SEO optimization can therefore no longer rely solely on lexical alignment: it must aim for deep semantic alignment, measured dynamically.
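To see what “lexical alignment” means in practice, here is a compact Okapi BM25 scorer. It uses the Lucene-style IDF variant with the common settings k1 = 1.5 and b = 0.75. Whitespace tokenization and the two example documents are simplifications chosen for illustration.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25: purely lexical, so only exact shared terms earn points."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n_docs
    df = Counter(term for d in tokenized for term in set(d))  # document frequency
    scores = []
    for doc in tokenized:
        tf = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # no exact match, no contribution
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(doc) / avgdl))
        scores.append(score)
    return scores


docs = [
    "erp pricing for small businesses",
    "what an integrated management suite costs a small company",
]
print(bm25_scores("erp pricing", docs))
```

The second document answers the same need in different words, yet BM25 gives it 0.0 because it shares no term with the query. Dense encoders are meant to close exactly this gap.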

In other words, AI-optimized text is text that anticipates how a machine encodes language, not how a human reads it. This requires a complete overhaul of editorial strategies.

The Age of Relevance Engineering

The relevance engineer does not write. He builds matrices of meaning. His main tool is not a CMS but a concept graph or a tool such as Diffbot Knowledge Graph. He works upstream of production, organizing information according to a modular, comprehensive logic.

A good passage, in the context of an LLM, meets three constraints:

- It must clearly state a piece of data (fact, date, figure)

- It must cite unique entities (company name, product, country)

- It must be structured in a format identifiable by the model (table, list, box, definition)
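These three constraints can be turned into a rough self-audit for editors. The sketch below is entirely heuristic and hypothetical: a digit stands in for “a piece of data”, a capitalized word after the first one stands in for a named entity, and a leading list marker or HTML block tag stands in for a structured format. None of these are Google’s actual criteria.

```python
import re


def check_passage(text: str) -> dict[str, bool]:
    """Crude checks for the three constraints (illustrative heuristics only)."""
    states_data = bool(re.search(r"\d", text))  # a date, figure or number
    words = re.findall(r"[A-Za-z][\w-]*", text)
    # A capitalized word that is not the first word: a weak proxy for a named entity.
    names_entity = any(w[0].isupper() for w in words[1:])
    # Starts like a list item, a table row, or a structural HTML element.
    structured = bool(re.match(r"\s*(?:[-*|]|\d+\.|<(?:table|ul|ol|aside|dl)\b)", text))
    return {"states_data": states_data, "names_entity": names_entity, "structured": structured}


good = "- Microsoft launched Azure AI in 2023"
vague = "our solution is really great for everyone"
print(check_passage(good))
print(check_passage(vague))
```

The first passage passes all three checks. The second passes none: it states no figure, names no entity and has no identifiable structure.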

The variety of formats is crucial: a multimodal AI like Gemini processes bullet points, SVG diagrams, infographics, and video summaries alike. This is a logic of vector accessibility: a concept expressed through several modalities is easier to spot and reuse.

Example query in AI Mode

Let’s take a simple query: “What is the latest news today?”

The system does not simply process it as is. It implicitly reformulates the question into a series of secondary queries. Here’s a simulated example of the generated tool call:

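```python
# Simulated tool call: google_search is an internal tool exposed to Gemini, not a public Python package.
print(google_search.search(queries=[
    "breaking news",
    "top stories june 12",
    "headlines today",
    "relevant political updates",
    "market movements june 12",
]))
```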

Each query produces a result, or rather a list of relevant passages, extracted in real time via the internal library tool_code. These calls are driven by internal functions such as GoogleSearch.SearchResults and GoogleSearch.PerQueryResult, which structure and organize the retrieved results in a vector index. When an answer also requires conversational context, Gemini uses the ConversationRetrieval.RetrieveConversationsResult function to supplement its reasoning from the relevant history.

The resulting set of passages is injected into the context window that Gemini uses to generate its synthesized response. This mechanism, similar to classic document chunking + retrieval, is the heart of AI Mode’s generation.
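The injection step can be sketched as follows. Ranked passages are packed greedily into a fixed token budget, then numbered so the model can cite them. Several parts are assumptions for illustration: the word-count token estimate (real models use their own tokenizers), the prompt wording and the example sources.

```python
def pack_context(ranked: list[tuple[str, str]], budget_tokens: int) -> str:
    """Greedily add (source, passage) pairs, best first, while they fit the budget.

    Token cost is approximated by the number of whitespace-separated words.
    """
    used = 0
    blocks: list[str] = []
    for source, passage in ranked:
        cost = len(passage.split())
        if used + cost > budget_tokens:
            continue  # too long for the remaining budget; a shorter one may still fit
        blocks.append(f"[{len(blocks) + 1}] ({source}) {passage}")
        used += cost
    return "\n".join(blocks)


def build_prompt(question: str, ranked: list[tuple[str, str]], budget_tokens: int = 30) -> str:
    """Wrap the packed context and the user question into a single prompt."""
    context = pack_context(ranked, budget_tokens)
    return f"Answer using only the numbered sources below.\n\n{context}\n\nQuestion: {question}"


ranked = [
    ("example.com/erp-guide", "ERP pricing for small businesses depends on the number of users and modules."),
    ("example.com/long-page", " ".join(["filler"] * 40)),
    ("example.com/integration", "Integration with accounting software is a key selection criterion."),
]
print(build_prompt("How much does an ERP cost for an SMB?", ranked))
```

The 40-word passage does not fit the 30-word budget, so it is skipped and the shorter third passage takes its place. This is one concrete reason why discrete, compact units of content have an advantage.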

In this context, the granularity of the documents matters: the more a site offers discrete, well-marked, and thematically coherent units of content, the more likely it is that certain passages will be extracted as candidates.

How does AI Mode select snippets?

Google uses a hybrid architecture: first a pre-filtering based on structural importance (HTML tags, position in the DOM, readability), followed by a re-encoding of the candidate passages, then a scoring based on cosine similarity, contextual attention, and sometimes quality control via RLHF.
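The two-stage idea can be sketched as a cheap structural prefilter followed by a semantic ranking of the survivors. The allowed tags, depth limit and length bounds are hypothetical thresholds. Similarity scores are assumed to be computed upstream, and the RLHF quality-control step is left out.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    tag: str           # HTML element containing the passage
    dom_depth: int     # nesting depth in the DOM
    similarity: float  # cosine similarity to the query, computed upstream


def prefilter(c: Candidate, max_depth: int = 8) -> bool:
    """Stage 1: structural checks on tag, DOM position and length (hypothetical thresholds)."""
    n_words = len(c.text.split())
    return (c.tag in {"p", "li", "table", "aside", "section"}
            and c.dom_depth <= max_depth
            and 5 <= n_words <= 300)


def select(cands: list[Candidate], k: int = 3) -> list[Candidate]:
    """Stage 2: rank the survivors by semantic similarity and keep the top k."""
    survivors = [c for c in cands if prefilter(c)]
    return sorted(survivors, key=lambda c: c.similarity, reverse=True)[:k]


cands = [
    Candidate("Home About Contact ERP pricing guide", "nav", 3, 0.90),
    Candidate("ERP pricing for small businesses depends on users and modules.", "p", 5, 0.82),
    Candidate("ERP Price Vendor A Tier 1 Vendor B Tier 2", "table", 6, 0.75),
    Candidate("Deeply nested widget text about ERP costs here", "li", 12, 0.88),
]
print([c.tag for c in select(cands)])  # ['p', 'table']
```

The navigation text and the deeply nested widget have the highest similarity scores, but they never reach the ranking stage because they fail the structural prefilter.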

Here is a comparison table of the criteria for including a passage in Gemini AI Mode:

| Criterion | Impact on selection | Example |
|---|---|---|
| Presence of named entities | Very strong | “Microsoft launched Azure AI in 2023” |
| Structured format (table, list, aside) | Strong | `<table><tr><td>ERP</td><td>Price</td>` |
| Vector proximity to the query | Essential | cosine similarity > 0.82 |
| Intra-passage coherence | Moderate | Transitions without thematic breaks |
| Past performance | Variable | Passage already clicked or validated through RLHF |

Algorithmic personalization: every search is unique

The user is no longer an anonymous session: they become a user embedding. This profile vector is computed from their queries, clicks, favorites, and Gmail, Maps, and YouTube history. This vector influences:

- Rewording of the query

- Prioritization of documents

- Formatting of the response (tone, length, format)

This means that two people searching one second apart can get two different AI Mode responses to the same query. SEO becomes a strategy for presence in individual vector universes. For brands, occupying the general semantic territory is no longer enough. They must also multiply their points of contact, with formats adapted to typical profiles (short, technical, popularized, infographics, etc.).
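One simple way to picture “two people, two answers” is to shift the query vector toward each user’s profile vector before ranking. This blending rule, the weight `alpha`, and the 2-dimensional toy space are all hypothetical. The source does not say how Google combines the two vectors.

```python
import math


def unit(v: list[float]) -> list[float]:
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v


def personalize(query_vec: list[float], user_vec: list[float], alpha: float = 0.3) -> list[float]:
    """Blend the query with the user profile: (1 - alpha) * query + alpha * user."""
    return unit([(1 - alpha) * q + alpha * u for q, u in zip(query_vec, user_vec)])


def best_passage(query_vec: list[float], passages: dict[str, list[float]]) -> str:
    """ID of the passage with the highest cosine similarity to the query."""
    q = unit(query_vec)
    return max(passages, key=lambda pid: sum(a * b for a, b in zip(q, unit(passages[pid]))))


# Toy 2-D space: axis 0 = "technical detail", axis 1 = "beginner-friendly".
passages = {"api-reference": [0.9, 0.1], "beginners-guide": [0.1, 0.9]}
query = [0.5, 0.5]  # an ambiguous query
developer = [1.0, 0.0]
novice = [0.0, 1.0]
print(best_passage(personalize(query, developer), passages))  # api-reference
print(best_passage(personalize(query, novice), passages))     # beginners-guide
```

The same ambiguous query is tilted toward the technical passage for one profile and toward the popularized one for the other. This is why covering several formats matters.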

Towards a semantic brand strategy

The modern company must think of its presence not as a series of positions in Google but as an algorithmic presence in semantic space. This involves integrating one’s brand into the model’s cognitive structures: repeated mentions in high-quality contexts, co-occurrence with major thematic entities, and inclusion in documents the models rate highly.

This also requires an overhaul of traditional indicators. We will no longer only talk about organic visibility, but about:

- Frequency of appearance in the generated responses (SGE tracking)

- Vector share of voice (alignment between the brand embedding and those of market queries)

- Implicit citation tracking (brand mentions without a hyperlink)
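Two of these indicators can be computed from a sample of AI answers collected by hand or exported from a tracking tool: how often the brand appears, and how often it is mentioned without a link. The data structure, the brand name “Acme” and the example answers are hypothetical. Vector share of voice would additionally require embeddings and is not shown.

```python
import re


def visibility_metrics(responses: list[dict], brand: str, domain: str) -> dict[str, float]:
    """Appearance rate and implicit-citation rate over a sample of AI answers.

    Each response is a dict with "text" (the answer) and "links" (cited URLs).
    """
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    appear = implied = 0
    for r in responses:
        mentioned = bool(pattern.search(r["text"]))
        linked = any(domain in url for url in r["links"])  # crude substring match
        appear += mentioned or linked        # brand visible in any form
        implied += mentioned and not linked  # named, but no hyperlink
    n = len(responses)
    return {"appearance_rate": appear / n, "implied_citation_rate": implied / n}


responses = [
    {"text": "Tools such as Acme offer AEO audits.", "links": []},
    {"text": "Several analytics vendors are listed in the sources.", "links": ["https://acme.example/"]},
    {"text": "Answer engine optimization focuses on passages.", "links": ["https://example.org/aeo"]},
    {"text": "Acme publishes guides on AI Mode.", "links": ["https://acme.example/blog"]},
]
print(visibility_metrics(responses, "Acme", "acme.example"))
```

The brand shows up in three of the four answers, and one of them names it without linking to it. That unlinked mention is the kind of implicit citation classic backlink tools miss.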

We are talking about algorithmic PR: influencing models, not journalists. And this requires in-depth work on content, format, and linking, and above all consistency in how identity signals are structured.

Methodology: how to measure your AI Mode visibility?

Measurement becomes experimental. Some recommended practices:

- Queries tested from multiple profiles (incognito, signed-in Gmail account, Android device)

- Observation of passages used in AI Mode (via SGE export or log analysis)

- Semantic analysis with LangChain, Haystack, RAGStack or Vertex AI Search

- Vector scoring with SentenceTransformer (paraphrase-mpnet-base-v2) or Gemini Embedding API

Tools such as PromptLayer, Traceloop or even frameworks such as LlamaIndex can help understand how a query is transmitted to the model, which documents are requested, and which parameters influence the final formulation.

A good indicator remains the semantic footprint: the number of times a domain is drawn on across various queries and through several response formats (table, citation, summary, diagram).
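Given a log of observations, the semantic footprint can be tallied per domain. Each observation records the query tested, the format of the response, and the URL it cited. The log format and the example entries are assumptions; how observations are collected (manual tests, exports, logs) is left open, as in the practices above.

```python
from collections import defaultdict
from urllib.parse import urlparse


def semantic_footprint(observations: list[tuple[str, str, str]]) -> dict[str, dict]:
    """Per domain: total citations, distinct queries, and distinct response formats."""
    queries: dict[str, set] = defaultdict(set)
    formats: dict[str, set] = defaultdict(set)
    citations: dict[str, int] = defaultdict(int)
    for query, fmt, url in observations:
        domain = urlparse(url).netloc
        queries[domain].add(query)
        formats[domain].add(fmt)
        citations[domain] += 1
    return {
        d: {"citations": citations[d], "queries": len(queries[d]), "formats": sorted(formats[d])}
        for d in citations
    }


obs = [
    ("best erp for an smb", "table", "https://acme.example/erp-comparison"),
    ("erp integration", "citation", "https://acme.example/integration"),
    ("erp integration", "summary", "https://other.example/guide"),
    ("erp security", "summary", "https://acme.example/security"),
]
print(semantic_footprint(obs)["acme.example"])
```

A domain cited across many distinct queries and several formats has a broader footprint than one cited many times for a single query in a single format.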

So: model rather than manipulate

Google isn’t destroying the web. It is recomposing it in vector form. The challenge is immense, but the opportunity is even greater for those who understand that writing is no longer enough, and that you also have to structure, vectorize, and model.

Today’s SEO is a game on several levels: content, architecture, PR, prompt engineering… but above all understanding the models. It is a war of layers, formats, identity signals, and granularity.

The best strategy is no longer to manipulate the algorithm but to make its reasoning easier: feed its intermediate layers and prepare the ground so that it selects you naturally, without forcing or cheating.

The greatest danger is not AI. It’s to keep doing SEO as if we were in 2015.
