A Leaked Document, an Open Window on How Google Works

It was an unprecedented event that we couldn’t ignore. The leak of some 3000 internal documents related to Google’s services is a major event for SEO professionals everywhere in the world! Our agency, BlackcatSEO, has taken the time to analyze this information in depth, both to better understand Google’s opaque workings and to check whether our techniques are suitable for our clients.

It is also a good source of information to validate the processes we develop for our SEO Prediict application!

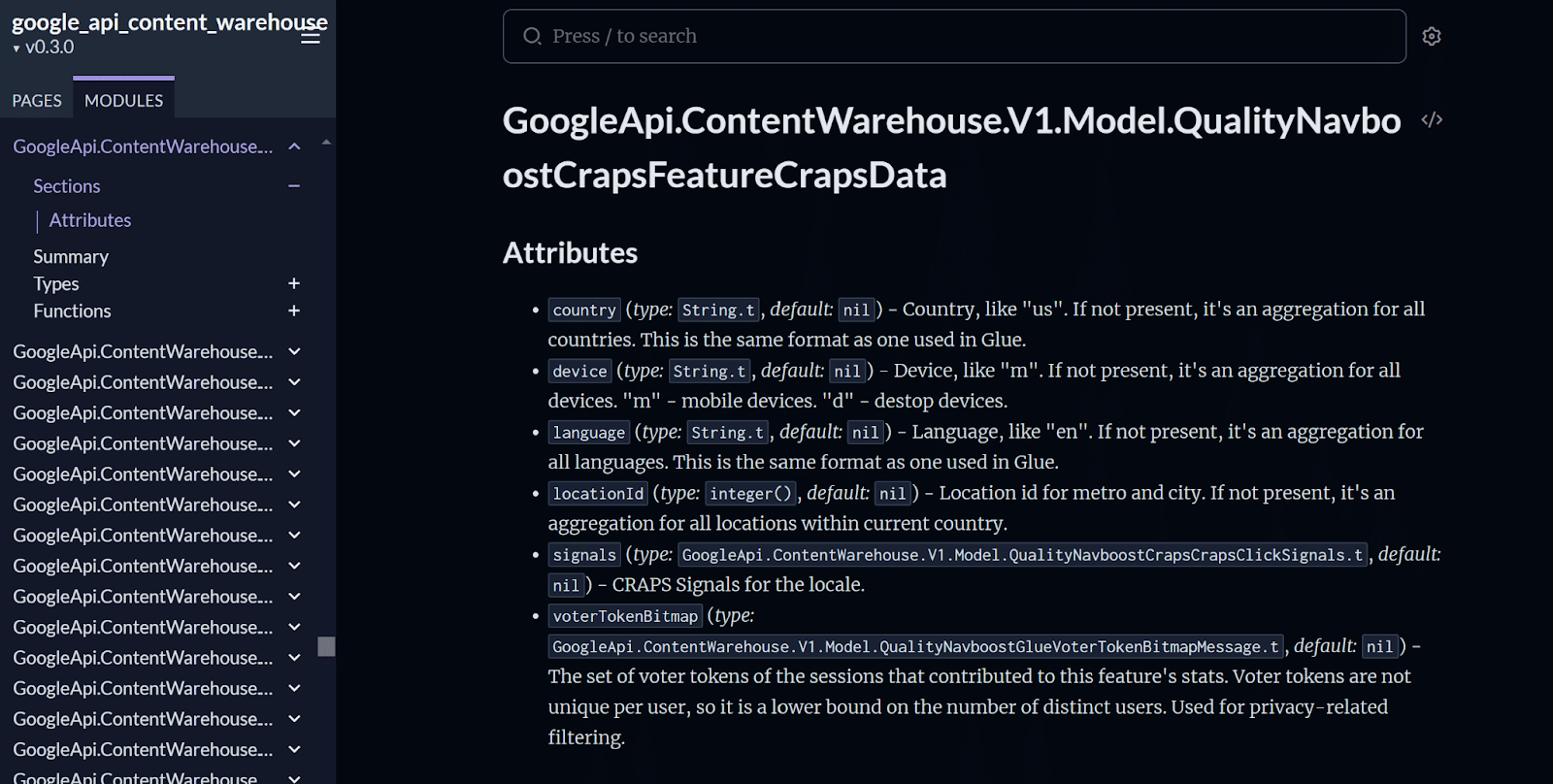

Basically, it’s an incredible opportunity to put ourselves to the test. Why? Search engine optimization (SEO) relies on complex algorithms in which every factor, however small, directly influences a site’s visibility. Search engines such as Google rely on a multitude of criteria to evaluate the quality of pages, whether content, internal link structure, or user interactions. Thanks to the recent leak of the GoogleApi.ContentWarehouse.V1 document, we now have access to previously unavailable details about how Google indexes content. This document, both a technical tool for engineers and a manual for managing content data, reveals crucial information about algorithms, filtering rules, metadata management, and much more. In this article, we explore this information, enrich it with advanced mathematical and algorithmic concepts, and illustrate it with real-world examples from SEO projects we have completed.

The Importance of Algebraic Structures

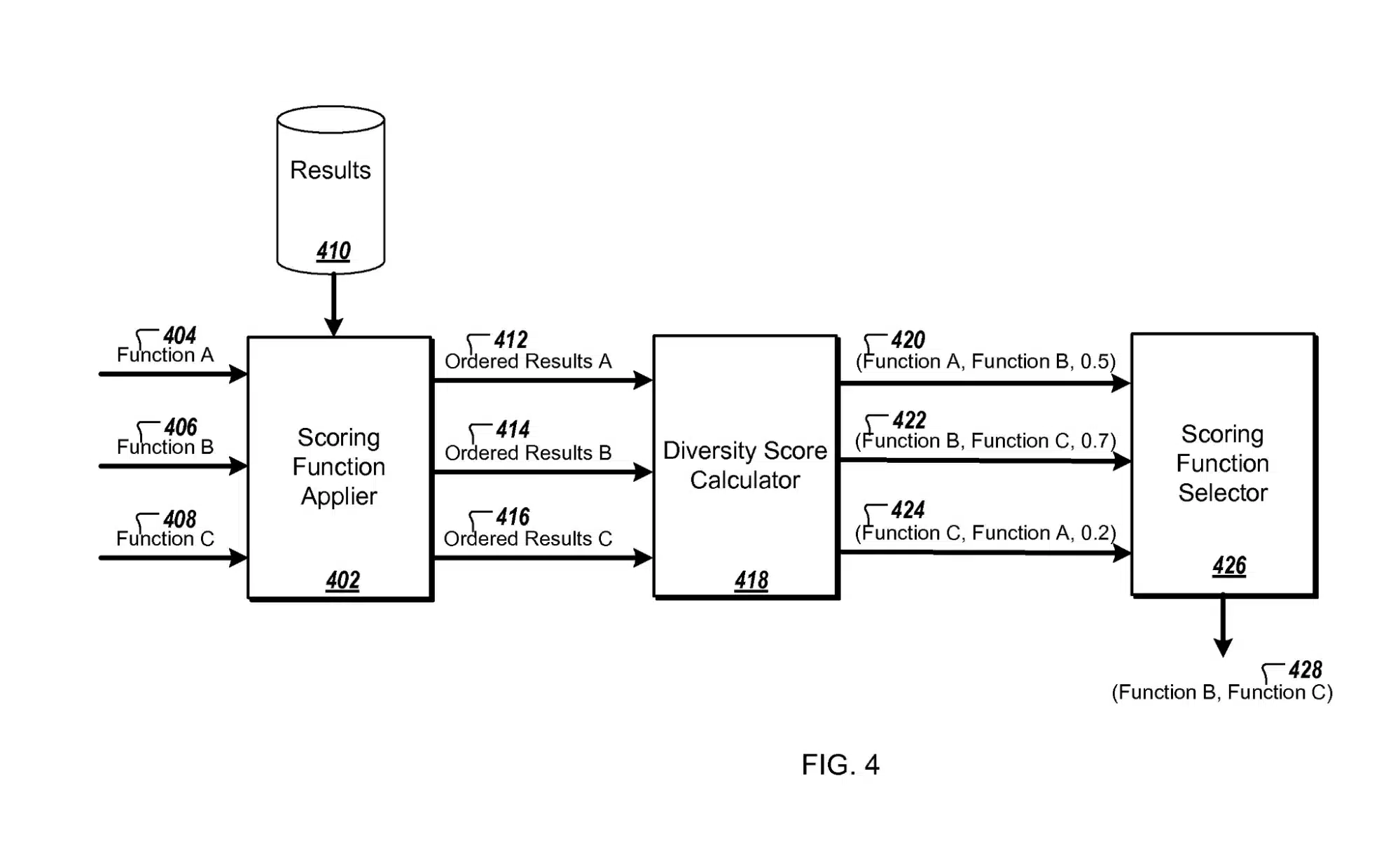

First, it is worth recalling a patent whose effects the SERPs regularly remind us of: Google clearly activates different ranking systems depending on the sector (for example, travel or commerce). The patent entitled Framework for evaluating web search scoring functions is a perfect example of how Google can run several ranking functions at the same time and then decide, once the data has been processed, which results to display. However, we still lack information about the internal processes needed to predict exactly what happens in each specific case.
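To make the idea concrete, here is a toy Python sketch in which several scoring functions are run on the same candidate pages and one ranking is picked per sector. It is not taken from the patent or the leak: the signal names (freshness, reviews, price_match), the weights, and the sector-to-function table are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical page representation: signal name -> normalized value in [0, 1].
Doc = Dict[str, float]
ScoringFn = Callable[[Doc], float]

def travel_score(doc: Doc) -> float:
    # Invented weights: travel results favour fresh content and reviews.
    return 0.6 * doc["freshness"] + 0.4 * doc["reviews"]

def commerce_score(doc: Doc) -> float:
    # Invented weights: commerce results favour price match and reviews.
    return 0.7 * doc["price_match"] + 0.3 * doc["reviews"]

# Hypothetical sector -> scoring function table.
SCORERS: Dict[str, ScoringFn] = {"travel": travel_score, "commerce": commerce_score}

def rank_all(docs: Dict[str, Doc]) -> Dict[str, List[str]]:
    """Score the same candidates with every function and keep each ranking."""
    return {
        sector: sorted(docs, key=lambda name: fn(docs[name]), reverse=True)
        for sector, fn in SCORERS.items()
    }

def results_for(sector: str, docs: Dict[str, Doc]) -> List[str]:
    """Choose which precomputed ranking to display for the query's sector."""
    return rank_all(docs)[sector]
```

With one page that is fresh but weak on price and another that matches price well, `results_for("travel", docs)` and `results_for("commerce", docs)` return the two pages in opposite orders, which is the behaviour the SERPs suggest.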

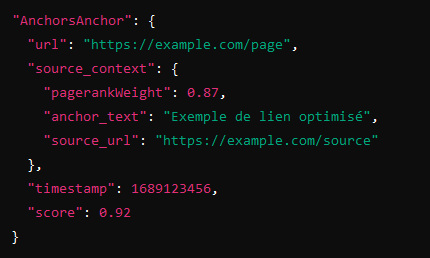

One of the central concepts in the GoogleApi.ContentWarehouse.V1 document is how Google handles internal and external links. PageRank is at the heart of this process, but it is enriched with additional variables that refine how page relevance is evaluated. Here’s an example of how Google evaluates an internal link:

In this snippet, an internal link carries a pagerankWeight of 0.87, which indicates that the source page has significant authority. This score is also influenced by the context of the anchor (source_context), including the anchor text (anchor_text) and the source URL. The document reveals that a page’s authority is partially transferred through these links. For Boutique Les Garçons, this approach means linking from the most visited pages, such as the hand-dyed thread pages, to lesser-known pages in order to pass authority to them and improve their visibility.
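The authority transfer described above can be illustrated with a classic weighted PageRank computed by power iteration over a small internal link graph. This is a minimal sketch under stated assumptions: the page names and link weights are hypothetical, the weights only loosely echo the pagerankWeight attribute, and this is not Google’s actual computation.

```python
from typing import Dict

Graph = Dict[str, Dict[str, float]]

# Hypothetical internal link graph: source page -> {target page: link weight}.
LINKS: Graph = {
    "home": {"hand-dyed-thread": 1.0, "new-collection": 0.5},
    "hand-dyed-thread": {"new-collection": 0.87, "home": 0.3},
    "new-collection": {"home": 1.0},
}

def weighted_pagerank(links: Graph, damping: float = 0.85, iterations: int = 50) -> Dict[str, float]:
    """Power-iteration PageRank where each page splits its score by link weight."""
    pages = set(links) | {t for out in links.values() for t in out}
    n = len(pages)
    out_weight = {p: sum(links.get(p, {}).values()) for p in pages}
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Teleport share, plus the score of pages with no outgoing weight spread evenly.
        dangling = sum(rank[p] for p in pages if out_weight[p] == 0)
        new = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for src, out in links.items():
            if out_weight[src] == 0:
                continue
            for dst, w in out.items():
                # Each target receives a share proportional to its link weight.
                new[dst] += damping * rank[src] * w / out_weight[src]
        rank = new
    return rank
```

Raising the weight of the link from a popular page to a lesser-known one (here, from "hand-dyed-thread" to "new-collection") increases the share of authority the lesser-known page receives, while the scores always sum to 1.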

Anchor Evaluation Algorithms

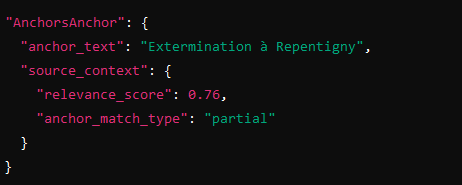

What matters about anchors in an internal linking structure is not simply their presence but their contextual relevance. The evaluation model presented in GoogleApi.ContentWarehouse.V1 rests on a link’s ability to reinforce the contextual understanding of one page relative to others. Here’s an excerpt from the Anchor Management algorithm:

This example shows how Google assigns a relevance_score of 0.76 to a link based on the fuzzy match between the anchor and the page content. In the Abasprix Extermination project, where local SEO for Repentigny and Laval is optimized through internal linking, this logic translates into anchors like “Pest Extermination in Repentigny” or “Exterminator Laval”, which maximize authority and local relevance and strengthen the relationship between pages and their content.
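One way to approximate a fuzzy match between an anchor and page content is to compare each anchor word with the closest word on the page using Python’s `difflib.SequenceMatcher`, then average the results. This is a swapped-in technique chosen for illustration: the function name `anchor_relevance` and the averaging scheme are assumptions, not the relevance_score formula from the leak.

```python
import re
from difflib import SequenceMatcher
from typing import List

def tokens(text: str) -> List[str]:
    """Lowercase word tokens (Unicode-aware, so accented letters are kept)."""
    return re.findall(r"\w+", text.lower())

def anchor_relevance(anchor: str, page_text: str) -> float:
    """Mean over anchor tokens of the best SequenceMatcher ratio against page tokens."""
    anchor_tokens = tokens(anchor)
    page_tokens = set(tokens(page_text))
    if not anchor_tokens or not page_tokens:
        return 0.0
    # For each anchor word, keep its closest fuzzy match on the page (0..1).
    best = [
        max(SequenceMatcher(None, a, p).ratio() for p in page_tokens)
        for a in anchor_tokens
    ]
    return sum(best) / len(best)
```

On a page mentioning “exterminators” and “Laval”, the anchor “Exterminator Laval” scores just under 1.0 because “exterminator” is a near match rather than an exact one, whereas an unrelated anchor scores noticeably lower.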

Algebraic Modeling of Content Restrictions and User Experience

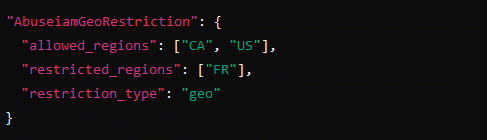

In GoogleApi.ContentWarehouse.V1, Google uses dynamic filtering models based on geo-restriction rules to improve the user experience. The AbuseiamGeoRestriction module makes it possible to restrict access to content according to users’ geographic location. This method relies on conditional filtering logic:

This code illustrates how Google determines which regions are allowed to access certain content: here, it is restricted in France (FR) but accessible in the United States and Canada. In a local SEO strategy, such as the one applied to Clark Influence, the same logic could be used to personalize content by region, thus strengthening the local relevance of the pages.
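The conditional filtering described above can be sketched as a small allow/block rule keyed on ISO country codes. The `GeoRestriction` class below is hypothetical and does not reproduce the fields of the real AbuseiamGeoRestriction model; it only mirrors the example of content blocked in France and allowed in the United States and Canada.

```python
from dataclasses import dataclass
from typing import FrozenSet

@dataclass(frozen=True)
class GeoRestriction:
    """Hypothetical geo rule using ISO 3166-1 alpha-2 country codes."""
    allowed: FrozenSet[str] = frozenset()
    blocked: FrozenSet[str] = frozenset()

    def is_visible(self, country: str) -> bool:
        code = country.upper()
        # An explicit block always wins.
        if code in self.blocked:
            return False
        # An empty allow-list means "everywhere not explicitly blocked".
        return not self.allowed or code in self.allowed

# Example mirroring the text: blocked in France, allowed in the US and Canada.
rule = GeoRestriction(allowed=frozenset({"US", "CA"}), blocked=frozenset({"FR"}))
```

With this rule, `rule.is_visible("us")` is true, `rule.is_visible("FR")` is false, and a country missing from a non-empty allow-list (for example `"DE"`) is also refused. Letting the block list win over the allow list keeps the behaviour predictable when a code appears in both.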

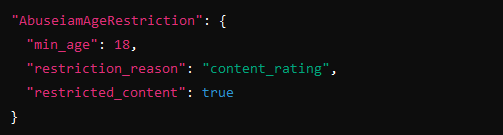

Managing Age Restrictions and Sensitive Content

Similarly, the document includes tools for managing age restrictions, based on the AbuseiamAgeRestriction module. This example shows how Google implements specific rules to keep underage users away from content deemed sensitive. For an e-commerce site that sells sensitive products to a specific audience, the same logic makes it possible to refine the viewable content according to users’ age, which could be relevant in a restricted-content strategy.
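An age gate of this kind comes down to computing a user’s age and comparing it with a minimum threshold. The sketch below is hypothetical (it is not the AbuseiamAgeRestriction model): it assumes the user’s birth date is known and treats users of unknown age as too young, a conservative choice for sensitive products.

```python
from datetime import date
from typing import Optional

def age_on(birth: date, today: date) -> int:
    """Completed years between birth and today."""
    before_birthday = (today.month, today.day) < (birth.month, birth.day)
    return today.year - birth.year - int(before_birthday)

def can_view(min_age: int, birth: Optional[date], today: Optional[date] = None) -> bool:
    """Hypothetical age gate: users with an unknown birth date are refused."""
    if birth is None:
        return False
    return age_on(birth, today or date.today()) >= min_age
```

For example, a user born on 2000-06-15 is refused an 18+ page on 2018-06-14 and allowed on 2018-06-15.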

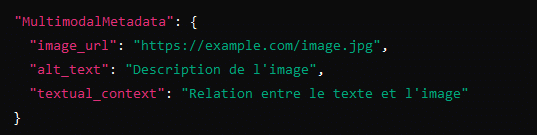

The Importance of Metadata and Contextual Fields

Metadata optimization, as explained in GoogleApi.ContentWarehouse.V1, plays a crucial role in indexing pages quickly and efficiently. Poorly structured metadata can lead to incomplete indexing, directly affecting the visibility of pages in search results. Here’s an example from the document about using metadata to improve indexing:

In an SEO strategy that includes rich content, such as for Volthium, the use of multimodal metadata could significantly improve the indexing of complex product images, allowing Google to better understand the relationship between the product and its technical specifications.
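On the site side, one standard way to tie product images to technical specifications is schema.org structured data in JSON-LD. The sketch below builds a `Product` object with `ImageObject` and `PropertyValue` entries; the function name, product name, URLs, and specs are hypothetical placeholders, and this is a site-side practice rather than the metadata format shown in the leak.

```python
import json
from typing import Dict

def product_jsonld(name: str, images: Dict[str, str], specs: Dict[str, str]) -> str:
    """Build schema.org Product JSON-LD linking images to technical specs.

    images: image URL -> caption; specs: spec name -> value.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        # Each image carries its own caption describing what it shows.
        "image": [
            {"@type": "ImageObject", "contentUrl": url, "caption": caption}
            for url, caption in images.items()
        ],
        # Technical specifications as structured name/value pairs.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in specs.items()
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)

if __name__ == "__main__":
    # Hypothetical product and URLs, for illustration only.
    print(product_jsonld(
        "12V 100Ah lithium battery",
        {"https://example.com/img/battery-front.jpg": "Front view of the 12V 100Ah battery"},
        {"Nominal voltage": "12 V", "Capacity": "100 Ah"},
    ))
```

The resulting string would be placed in a `<script type="application/ld+json">` tag on the product page.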

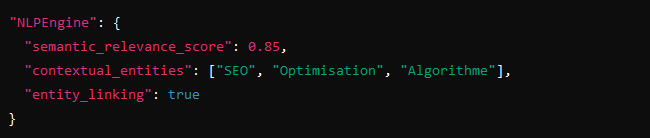

NLP and Semantic Algorithms: The Future of Optimization

Google increasingly uses Natural Language Processing (NLP) to understand the context and intent of searches, beyond simple keyword matching. The GoogleApi.ContentWarehouse.V1 document shows that Google relies on machine learning models to assess the semantic relevance of content. Here is an example of the built-in NLP algorithm:

In this snippet, a semantic relevance score is assigned to a page, based on the presence of contextual_entities that reinforce the meaning of the text. This concept could be applied to projects like Maloi25, where compliance with Law 25 requires a fine-grained and contextual understanding of the text, thus strengthening keyword accuracy and semantic relevance for SEO.
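A simple way to picture an entity-based relevance score is the weighted share of expected entities that a text actually mentions. This sketch is an assumption for illustration: the function names, entity list, and weights are hypothetical, and real NLP systems rely on trained entity recognition rather than exact phrase matching.

```python
import re
from typing import Dict

def _normalize(text: str) -> str:
    """Lowercase and collapse punctuation and whitespace to single spaces."""
    return " ".join(re.findall(r"\w+", text.lower()))

def semantic_relevance(text: str, entity_weights: Dict[str, float]) -> float:
    """Weighted share (0..1) of expected entities mentioned in the text."""
    total = sum(entity_weights.values())
    if total <= 0:
        return 0.0
    # Pad with spaces so only whole-word phrase matches count.
    padded = f" {_normalize(text)} "
    found = sum(
        weight for entity, weight in entity_weights.items()
        if f" {_normalize(entity)} " in padded
    )
    return found / total

# Hypothetical entities and weights for a Law 25 compliance page.
LAW25_ENTITIES = {
    "Law 25": 3.0,
    "personal information": 2.0,
    "consent": 1.0,
    "privacy impact assessment": 2.0,
}
```

A sentence such as “Law 25 requires explicit consent before collecting personal information.” mentions three of the four entities (weights 3 + 2 + 1 out of 8) and scores 0.75; adding a mention of a privacy impact assessment would bring it to 1.0.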

A Technical Vision for Sustainable Optimization

The GoogleApi.ContentWarehouse.V1 leak offers a rare opportunity to understand Google’s inner workings in depth and to adapt these techniques to more advanced SEO strategies. By integrating these concepts into projects such as Volthium, Fissure Experts, or Lelili Fleurs, it becomes possible not only to improve internal linking and indexing but also to anticipate future SEO trends. This technical approach, combining optimization algorithms, user interaction analysis, and multimodal metadata, can give a site a significant competitive advantage while adhering to the SEO best practices established by Google.

That’s why we are one of the top SEO agencies in Montreal!
