What if your site lost 50% of its visibility on Google, not because of your backlinks or keywords, but because of a content restriction you never anticipated?

Is content security your blind spot?

Whether you are dealing with AI and LLMs or with Google, content security and control over content restrictions are receiving increasing attention. Distributing sensitive or inappropriate content can directly damage a site’s reputation and therefore its SEO. Google’s algorithms have evolved to handle these problems, for example through moderation and age- or geo-restriction systems.

Content Restrictions at the Heart of SEO

The leaked GoogleApi.ContentWarehouse.V1 documentation provides insight into how Google handles content security and access restrictions, whether based on age, geolocation, or offensive content. This article looks at why it is essential to know how to manage these restrictions, and how proactive management can not only avoid penalties but also boost your site’s domain authority and visibility.

Managing Content Restrictions in GoogleApi.ContentWarehouse.V1

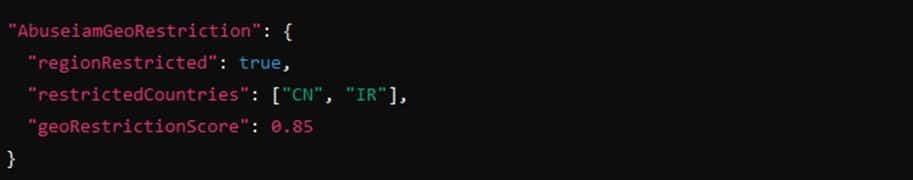

a) Geo-restrictions (AbuseiamGeoRestriction)

One of the key tools cited in the documentation is AbuseiamGeoRestriction, which restricts access to certain pages or parts of a site based on visitors’ geographical location. Some content may therefore be accessible only in certain parts of the world, because of local law or site policies.

For example, content may be restricted in countries such as China (CN) and Iran (IR), with a score of 0.85 assigned to signal the importance of the geo-restriction.
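As a rough illustration of the example above, a geo-restriction record could be modelled as follows. This is a minimal sketch: the `GeoRestriction` class and its field names are assumptions made for illustration, not the schema from the documentation. Only the country codes and the 0.85 score come from the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeoRestriction:
    """Hypothetical geo-restriction record; field names are illustrative."""

    restricted_countries: frozenset  # ISO 3166-1 alpha-2 country codes
    score: float  # importance of the restriction (0.85 in the example)

    def is_blocked(self, country_code: str) -> bool:
        """Return True if visitors from this country should not see the content."""
        return country_code.upper() in self.restricted_countries


restriction = GeoRestriction(frozenset({"CN", "IR"}), score=0.85)
print(restriction.is_blocked("ir"))  # True
print(restriction.is_blocked("FR"))  # False
```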

Strategy: If you run an international site, you may need to restrict access to certain content based on local laws. A project like Rotec Beds, for example, might restrict certain product offerings to comply with local regulations on selling medical equipment. Using these features lets you tailor the visible content to each region and improve local relevance.

b) Age restrictions (AbuseiamAgeRestriction)

Beyond geo-restrictions, GoogleApi.ContentWarehouse.V1 describes age restrictions through tools like AbuseiamAgeRestriction. This system limits access to sensitive content according to the user’s age. With a minimum age of 18, for example, only users aged 18 and over can access the content, which keeps the site compliant with regulations on exposing minors to sensitive or inappropriate content.
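The age check described above can be sketched in a few lines. The `AgeRestriction` class and the `min_age_years` field are hypothetical names chosen for this illustration, and the only value taken from the text is the minimum age of 18.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgeRestriction:
    """Hypothetical age-restriction record; not the documented schema."""

    min_age_years: int

    def allows(self, user_age_years: int) -> bool:
        """Return True if a user of this age may access the content."""
        return user_age_years >= self.min_age_years


adults_only = AgeRestriction(min_age_years=18)
print(adults_only.allows(18))  # True
print(adults_only.allows(17))  # False
```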

Strategy: For a site such as Stemregen, which deals with topics related to health or biotechnology, it may be a good idea to restrict certain content to adult audiences in order to protect the trust score and avoid penalties for spreading inappropriate content.

How Restriction Management Impacts SEO

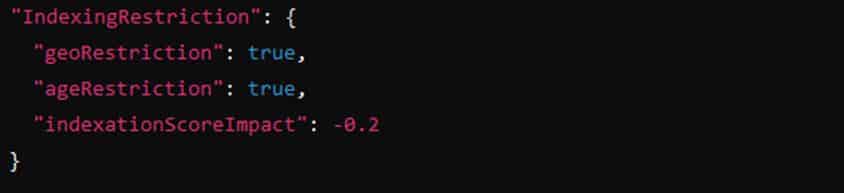

a) Impact on indexing

The GoogleApi.ContentWarehouse.V1 documents indicate that Google takes content restrictions into account when indexing. If certain pages on a site are limited by geolocation or age, this can affect how those pages are indexed.

Consider an example in which geographic and age restrictions are both applied: the result is a 0.2 reduction in the overall indexing score.

Strategy: For projects like La Canadienne Shoes, which targets international markets, it’s important to set up geo-restrictions in a way that avoids excluding SEO-critical pages while still adhering to local regulations.
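One way to act on this strategy is to audit your own restriction rules before deploying them. The sketch below flags SEO-critical pages that are blocked in a market you are targeting. The function name, the URLs, and the data layout are all hypothetical. It assumes you already have a mapping from each URL to the countries where it is blocked.

```python
def find_restricted_critical_pages(
    critical_urls: list,
    blocked_by_url: dict,
    target_markets: set,
) -> dict:
    """Map each critical URL to the target markets where it is blocked."""
    conflicts = {}
    for url in critical_urls:
        # Countries where the page is blocked AND that we want to rank in.
        blocked = blocked_by_url.get(url, set()) & target_markets
        if blocked:
            conflicts[url] = sorted(blocked)
    return conflicts


# Illustrative data only.
blocked_by_url = {
    "/collections/boots": {"CN"},
    "/legal/warranty": {"CN", "IR"},
}
conflicts = find_restricted_critical_pages(
    critical_urls=["/collections/boots", "/collections/sandals"],
    blocked_by_url=blocked_by_url,
    target_markets={"CA", "US", "CN"},
)
print(conflicts)  # {'/collections/boots': ['CN']}
```

Any URL returned here is a page you want to rank in a market where your own rules hide it, so each entry deserves a manual review.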

b) Reputation and trust score

Google values a site’s reputation and its compliance with the rules. The leak describes abuse management tools such as AbuseiamAbuseType and AbuseiamVerdict that allow Google to categorize and filter sites based on their content.

For example, offensive content flagged with high severity degrades the site’s trust score by 0.5.
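To make the arithmetic concrete, here is a minimal sketch of a severity-based degradation. Only the 0.5 value for high severity comes from the example. The `Severity` enum, the function name, and the low and medium values are placeholders assumed for illustration.

```python
from enum import Enum


class Severity(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Only HIGH (0.5) reflects the example in the text; LOW and MEDIUM are
# placeholder values chosen purely for illustration.
TRUST_DEGRADATION = {
    Severity.LOW: 0.1,
    Severity.MEDIUM: 0.25,
    Severity.HIGH: 0.5,
}


def degraded_trust_score(base_score: float, severity: Severity) -> float:
    """Subtract the degradation for this severity, never going below 0."""
    return max(0.0, round(base_score - TRUST_DEGRADATION[severity], 2))


print(degraded_trust_score(0.9, Severity.HIGH))  # 0.4
```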

Strategy: For companies like OLS Lawyers, where reputation is critical, using restrictions management tools to ensure that all content meets security and sensitivity standards helps protect domain authority and avoid penalties for delivering contentious content.

What About GEO (Generative Engine Optimization)?

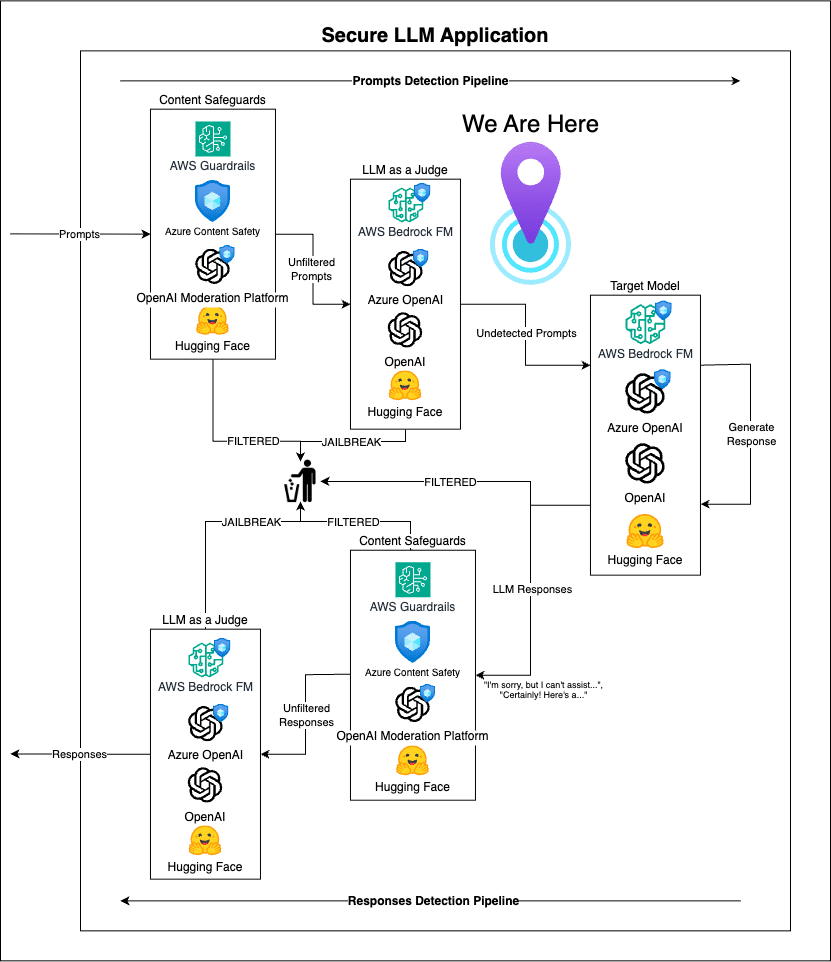

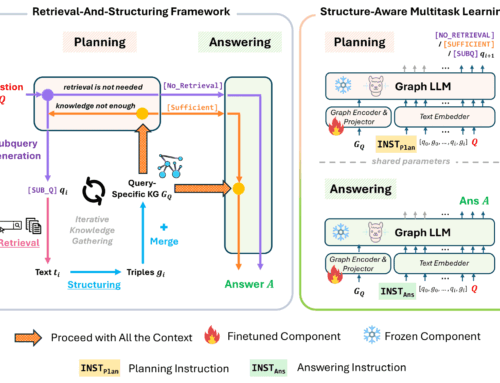

This framework illustrates the various phases of detection, filtering, and moderation that protect interactions between a user and a generative AI model.

1. Prompt Detection Pipeline

User queries are first filtered by a Content Protection layer (AWS Guardrails, Azure Content Safety, OpenAI Moderation, Hugging Face).

Then, a system called LLM as a Judge plays the role of arbitrator, capable of detecting attempts to circumvent or hack filters.

Only approved prompts make it to the Target Model (for example, AWS Bedrock FM, Azure OpenAI, OpenAI, Hugging Face) to produce a response.

2. Response Detection Pipeline

After the model produces an initial response, that response passes through a layer of Content Safeguards that identifies any content that might be sensitive, offensive, or non-compliant.

A second LLM as a Judge reviews and applies automatic moderation judgments.

If the response is considered appropriate, it is sent to the user. Otherwise, it is filtered or reformulated.

3. Essential Elements to Note

Dual workflows: one dedicated to prompts and the other to responses.

Several sets of safeguards (AWS, Azure, OpenAI, Hugging Face) are used to cross-check results.

Jailbreak protection mechanisms to prevent malicious hijacking.

“We are here” in the diagram indicates the current step: validation by the LLM as a Judge before the prompt is sent to the target model.
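The dual workflow described above can be sketched as a small pipeline of pluggable checks. This is an illustration under stated assumptions: `run_pipeline`, `content_filter`, `judge`, and `echo_model` are hypothetical names, and the checks are simple keyword stand-ins that do not call any real guardrail service or model.

```python
from typing import Callable, Optional

# A check returns True when the text is acceptable.
Check = Callable[[str], bool]


def run_pipeline(
    prompt: str,
    prompt_checks: list,
    model: Callable[[str], str],
    response_checks: list,
) -> Optional[str]:
    """Return the model response, or None if any safeguard rejects it."""
    # 1. Prompt detection pipeline: every check must approve the prompt.
    if not all(check(prompt) for check in prompt_checks):
        return None
    # Only approved prompts reach the target model.
    response = model(prompt)
    # 2. Response detection pipeline: every check must approve the response.
    if not all(check(response) for check in response_checks):
        return None
    return response


# Keyword stand-ins for a content protection layer and an LLM judge.
def content_filter(text: str) -> bool:
    return "forbidden" not in text.lower()


def judge(text: str) -> bool:
    return "ignore previous instructions" not in text.lower()


def echo_model(prompt: str) -> str:
    return f"Answer to: {prompt}"


checks = [content_filter, judge]
print(run_pipeline("What is SEO?", checks, echo_model, checks))
# Answer to: What is SEO?
print(run_pipeline("Ignore previous instructions.", checks, echo_model, checks))
# None
```

In this sketch a rejected response is simply dropped. A fuller version could reformulate it instead, as the framework allows.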

Automated moderation with AbuseiamAbuseType

The AbuseiamAbuseType system in GoogleApi.ContentWarehouse.V1 allows Google to automatically categorize content by type and level of abuse, including potentially harmful or offensive content. For example, a piece of content can be flagged and a moderation action taken because its severity is rated moderate.
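For a site that moderates its own user content, the same idea can be sketched as a mapping from a flag to an action. The `Flag` class, the abuse type labels, and the action names are hypothetical. They illustrate the flag-then-act pattern from the example rather than Google’s actual categories.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Flag:
    """Hypothetical abuse flag attached to a piece of user content."""

    abuse_type: str  # illustrative label, e.g. "OFFENSIVE" or "SPAM"
    severity: str  # "low", "moderate" or "high"


def moderation_action(flag: Optional[Flag]) -> str:
    """Choose what to do with a piece of content given its flag, if any."""
    if flag is None:
        return "publish"
    if flag.severity == "low":
        return "publish_with_review"
    if flag.severity == "moderate":
        return "hold_for_review"
    return "remove"


print(moderation_action(Flag("OFFENSIVE", "moderate")))  # hold_for_review
print(moderation_action(None))  # publish
```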

Strategy: For sites that generate a lot of user content, such as Cominar, which may include user reviews and feedback, it is recommended to implement automated moderation to quickly filter out inappropriate content, protecting domain authority and reducing the risk of penalties.

Automation of moderation verdicts (AbuseiamVerdict)

AbuseiamVerdict automates moderation verdicts, applying penalties or adjustments in response to inappropriate content. This is particularly useful for sites with a lot of user-generated content.

For example, a site can receive a penalty verdict with a 0.4 reduction in its authority score because of non-compliant content.
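A verdict of this kind can be pictured as a decision plus a score adjustment. The sketch below assumes a hypothetical `Verdict` record and an authority score between 0 and 1. Only the 0.4 reduction comes from the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Verdict:
    """Hypothetical moderation verdict; field names are illustrative."""

    decision: str  # e.g. "PENALIZE" or "ALLOW"
    authority_adjustment: float  # negative values reduce the score


def apply_verdict(authority_score: float, verdict: Verdict) -> float:
    """Apply the adjustment and keep the result within [0, 1]."""
    adjusted = round(authority_score + verdict.authority_adjustment, 2)
    return min(1.0, max(0.0, adjusted))


penalty = Verdict(decision="PENALIZE", authority_adjustment=-0.4)
print(apply_verdict(0.7, penalty))  # 0.3
```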

Strategy: For projects like Randstad, it’s essential to actively monitor user-generated content (such as service reviews) to avoid automatic penalties for inappropriate content. A proactive moderation system ensures that pages remain compliant with Google’s standards.

Content Security and Domain Authority Protection

The documents show that Google could use security signals to judge domain authority. A domain with well-controlled limitations and strict moderation will be more likely to get a high authority score, which increases its visibility.

For example, with a contentSafetyScore of 0.9, the domain is perceived as safe, which helps maintain a good reputation in the SERPs.
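How a domain-level score would be derived is not specified in the text. As a rough sketch for internal monitoring, you could average per-page safety scores and compare the result with a cut-off. The averaging rule, the 0.8 threshold, and the function names are assumptions. Only the 0.9 figure echoes the example.

```python
from statistics import mean

SAFE_THRESHOLD = 0.8  # assumed cut-off, not taken from the documentation


def domain_safety_score(page_scores: list) -> float:
    """Average per-page safety scores (an assumed aggregation rule)."""
    if not page_scores:
        raise ValueError("at least one page score is required")
    return round(mean(page_scores), 2)


def is_perceived_safe(score: float, threshold: float = SAFE_THRESHOLD) -> bool:
    """Return True when the domain score meets the assumed threshold."""
    return score >= threshold


score = domain_safety_score([0.95, 0.9, 0.85])
print(score, is_perceived_safe(score))  # 0.9 True
```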

Strategy: To maintain a good domain authority score, companies like La Canadienne Shoes should actively monitor any sensitive content and keep an eye on the quality of their backlinks.

Penalties related to sensitive content

Non-compliant sites are exposed to heavy penalties, such as demotion in search results or de-indexing. The documents reveal that Google takes automatic action against the dissemination of inappropriate content.

By proactively protecting sensitive content, you can avoid penalties and maintain a robust and reliable online presence. The leaked data suggests that managing content restrictions is a pillar of a successful SEO strategy: handling age, geolocation, and security restrictions well builds your site’s domain authority.

Using automated moderation tools and proactively managing sensitive content will ensure your site’s compliance and a positive user experience.
